Multiple Choice Examination System

2. Online Quizzes for General Chemistry

Horea Iustin NAŞCU, Lorentz JÄNTSCHI

Technical University of Cluj-Napoca, Romania

http://chimie.utcluj.ro/~nascu, http://lori.academicdirect.org

Abstract

The paper presents the main aspects and the implementation of an online multiple-choice examination system for student evaluation in general chemistry. The testing system was used to generate items for a multiple-choice examination for first-year undergraduate students in Materials Engineering and Environmental Engineering at the Technical University of Cluj-Napoca, Romania, who all attend the same General Chemistry course.

Keywords

Online Evaluation, Students Evaluation, Multiple Choice Examination, Databases

Introduction

The student evaluation at the end of a semester usually covers all subjects discussed in the course, laboratory, seminars, and projects. When the range of subjects is large, as in general chemistry, a practical idea is to create a multiple-choice examination system. The practice is prevalent because multiple-choice examinations provide a relatively easy way to test students on a large number of topics. Moreover, for a large number of students, a classical (written) evaluation system consumes a considerable amount of time.

Using a multiple-choice evaluation does not exclude the application of other evaluation systems (oral examination, home papers, continuous assessment during laboratory work), and perhaps the best idea is to combine all of them in order to give the final mark.

When the evaluation does not take place at the same time for all students, the multiple-choice tests must be regenerated in order to prevent students from learning the answers.

The main aspects of generating multiple-choice tests, such as the classification system, the database design, and the software implementation of a multiple-choice examination system for general chemistry, were discussed in a previous paper [1].

Even if the multiple-choice examination is taken on paper, grading it still takes a considerable amount of time. A better idea is to build an online interface for evaluation.

Using the features of a database server, we built an online testing system that generates tests of 60 multiple-choice items for a student-level general chemistry course. The tests were applied to first-year undergraduate students in Materials Engineering and Environmental Engineering at the Technical University of Cluj-Napoca, Romania.

Database Design

The implementation of the multiple-choice evaluation system uses a relational database that stores chapter, section, and subject names, questions, and answers in separate tables. In order to store the examination results, the previously reported structure of the database [1] was extended with a new table, called `Exam`. A MySQL database server stores the database on the vl.academicdirect.org server. The structure of the `Exam` table is depicted in figure 1:

| Field | Type |
|---|---|
| id | Int(11) primary_key auto_increment |
| IP | Char(15) |
| name | Char(255) |
| date | Char(17) |
| dific | Int(11) |
| scor | Int(11) |
| nquest | Int(11) |
| lquest | Text |
| lansw | Text |
| lmap | Text |
| emap | Text |
| lprob | Text |
| dat0 | Char(17) |

Figure 1. General Chemistry (1) `Exam` table structure

The significance and stored values of the fields are the following:

· the `id` field is an auto-increment value that is managed by the database server and serves as the primary key for the unique identification of records in our programs;

· the `IP` field stores the remote IP address from which the test user was logged on;

· the `name` field stores the name of the user (the student) who logged in to complete the test;

· the `date` field stores the server date and time at which the test was sent to the client, in the format yy.mm.dd HH:ii:ss, where “yy” stands for the year, “mm” for the month, “dd” for the day, “HH” for the hour, “ii” for the minutes, and “ss” for the seconds;

· the `dific` field stores the overall difficulty of the generated test; it can be a value from 160 to 175;

· the `scor` field stores the overall score of the client (student) solution, which accumulates the points of all correctly answered questions from the test; the field value is updated at the end of the test;

· the `nquest` field stores the number of correctly answered questions from the test; the field value is updated at the end of the test;

· the `lquest` field stores the list of keys of the questions that were included in the generated test (the keys are the primary keys from the `Questions` table);

· the `lansw` field stores the list of keys of the answers that were included in the generated test (the keys are the primary keys from the `Answers` table);

· the `lmap` field stores the list of keys of the answered questions (not all questions are obligatory); the `lmap` field always contains a subset of the keys from the `lquest` field;

· the `emap` field stores the list of keys of the answers given to the answered questions (same remark as above); the `emap` field always contains a subset of the keys from the `lansw` field;

· the `lprob` field stores the keys of the generated questions marked as problems in the questions table (these require a handwritten solution);

· the `dat0` field stores the server date and time at which the solution of the test was received from the client, in the same format as the `date` field.

Not all fields are used yet; some of them are reserved for future use. Thus, the text type fields `lquest`, `lansw`, `lmap`, `emap`, and `lprob` are intended for future exploitation of the test results. Future uses include generating statistics, such as the most frequently correctly answered questions, the most frequently chosen correct answers, the most frequently wrongly answered questions, and the most frequently chosen wrong answers, in order to take administrative and educational decisions, such as eliminating or modifying a question or an answer, or better explaining the phenomena involved during the course, seminars, and practical work.
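As an illustration of the statistics mentioned above, the following is a minimal sketch that counts how often each answer key occurs in a set of stored `emap` values. It assumes that the keys are stored as a comma-separated string; the paper does not state the separator, and the function name `answerFrequencies` is hypothetical.

```php
<?php
/**
 * Counts how often each answer key occurs in a list of `emap` strings.
 * ASSUMPTION: keys are separated by commas (the separator is not given in the paper).
 * Returns an array answer key => count, most frequent first.
 */
function answerFrequencies(array $emapRows, string $sep = ','): array
{
    $all = [];
    foreach ($emapRows as $emap) {
        foreach (explode($sep, $emap) as $key) {
            // An empty field yields one empty fragment; skip it.
            if ($key !== '') {
                $all[] = (int) $key;
            }
        }
    }
    $counts = array_count_values($all);
    arsort($counts); // sort by count, descending, keeping the keys
    return $counts;
}
```

Joining the resulting keys against the table of correct answers would then separate frequently chosen correct answers from frequently chosen wrong ones.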

Software Implementation

The database administration software includes the programs for inserting and modifying questions and answers and is accessible at the address:

http://vl.academicdirect.ro/general_chemistry/evaluation/admin/

The path is password protected and is used only by the system administrator.

The online test generation software includes the programs for generating tests and is accessible at the address:

http://vl.academicdirect.ro/general_chemistry/evaluation/online/

Note that the test cannot be accessed from anywhere: access is allowed only from a list of ten computers specified in our program (by checking the remote IP address), all of which are located in our evaluation classroom.

Online Testing Software

The online testing software consists from a set of four PHP programs, all placed in our “online/” directory: index.php, online0.php, online1.php, and online2.php, which accomplish following tasks:

· index.php - display a form on which the client user (the student) must fill his (here) first and last name;

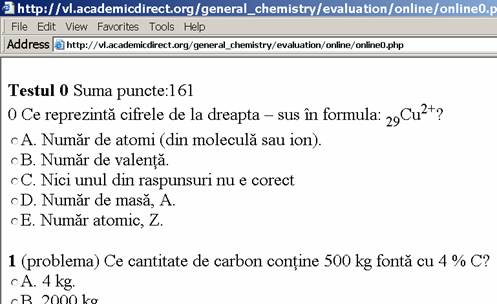

· online0.php is responsible with test generation; It displays the test (figure 1);

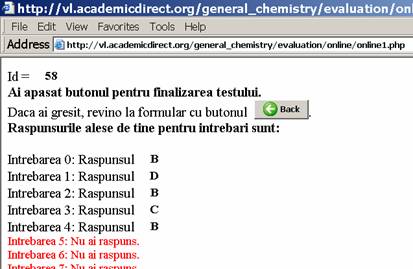

· online1.php display a list with user answers for last time checks (figure 2);

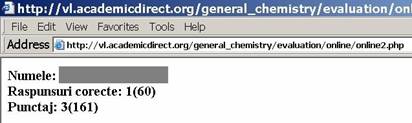

· online2.php completes the test and record the results (figure 3).

Some security issues were included in our programs. Thus:

· the programs communicates through POST method:

<form method='post' action='online1.php'>

· client IP address is checked:

$ippp = getenv("HTTP_X_FORWARDED_FOR");

$ip_este = 0;

if ($ippp == "XXX.XXX.XXX.XX0") $ip_este = 1;

…

if ($ippp == "XXX.XXX.XXX.XX9") $ip_este = 1;

if (!$ip_este) die("No access, ".$ippp);

· the referrer program is checked in the same manner as the client IP address, using:

$ref_p = getenv("HTTP_REFERER");
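The sequence of comparisons above can be condensed into a lookup in a list of allowed addresses. The following is a minimal sketch, not the code used on the server: the addresses are placeholders from the documentation range 192.0.2.0/24 (the paper masks the real ones), and the names `ALLOWED_IPS` and `isAllowedClient` are hypothetical.

```php
<?php
// ASSUMPTION: placeholder addresses; the real classroom addresses are masked in the paper.
const ALLOWED_IPS = ['192.0.2.10', '192.0.2.11', '192.0.2.12'];

/**
 * Returns true when $ip is one of the allowed classroom computers.
 */
function isAllowedClient(string $ip, array $allowed = ALLOWED_IPS): bool
{
    // Strict comparison: the address must match one list entry exactly.
    return in_array($ip, $allowed, true);
}

// Usage inside a page script (hypothetical):
//   $ip = $_SERVER['REMOTE_ADDR'] ?? '';
//   if (!isAllowedClient($ip)) die("No access, " . htmlspecialchars($ip));
```

Note that the `X-Forwarded-For` header is supplied by proxies and can also be set by the client itself, so where the server is not behind a trusted proxy, `REMOTE_ADDR` is generally the more reliable source for such a check. The same applies to the `Referer` header, which is also client-supplied.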

Figure 2. “online0.php” program output demo

Figure 3. “online1.php” program output demo

Figure 4. “online2.php” program output demo

The “online0.php” program generates the test using an original algorithm described in a previous paper [1]. After generating the test, it collects the user data:

· User IP: $IP = getenv("HTTP_X_FORWARDED_FOR");

· User name: $name = ucwords(strtolower(trim($_POST['name'])));

· Start date and time: $datetime = date("y.m.d H:i:s", time());

and collects the generated data (question and answer keys):

· Retrieve the data of a question from the database using the SQL query:

SELECT * FROM `Questions` WHERE `id`='$sir[$i][0]' LIMIT 1

· Retrieve the data of the answers to a question from the database using the SQL query:

SELECT * FROM `Answers` WHERE `id`='$sir[$i][0]' LIMIT 1

· Retrieve the keys of the correct answers to a question from the database using the SQL query:

SELECT `an` FROM `Corect` WHERE `qu`='$id_q' ORDER BY `an` DESC

· Prepare the value for the `lquest` field by imploding the array of keys of the selected questions into a string;

· Prepare the value for the `lansw` field by imploding the array of keys of the selected answers into a string;

· Prepare the value for the `lprob` field by imploding into a string the array of keys of those selected questions that have the `problema` field set in the `Questions` table;

· Calculate the test difficulty (which will be stored in the `dific` field) by summing the `puncte` field values of all retrieved questions.
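The last four steps can be sketched as follows. This is a minimal illustration under stated assumptions: each selected question is represented as an array with the keys `id`, `puncte`, and `problema` (the column names come from the paper, the array layout does not), the separator is a comma, and the function name `summarizeTest` is hypothetical. The `lansw` value would be built in the same way from the answer keys.

```php
<?php
/**
 * Builds the `lquest`, `lprob` and `dific` values from the selected questions.
 * ASSUMPTION: each question is ['id' => int, 'puncte' => int, 'problema' => int]
 * and keys are joined with a comma; neither detail is given in the paper.
 */
function summarizeTest(array $questions, string $sep = ','): array
{
    // Keep only the questions flagged as problems (handwritten solution).
    $problems = array_filter($questions, fn($q) => !empty($q['problema']));

    return [
        'lquest' => implode($sep, array_column($questions, 'id')),
        'lprob'  => implode($sep, array_column($problems, 'id')),
        'dific'  => array_sum(array_column($questions, 'puncte')),
    ];
}
```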

Finally, the program sends the test to the client and sends the user and test information to the database server using the following SQL query:

INSERT INTO `Exam`

(`id`,`IP`,`name`,`date`,`dific`,`lquest`,`lansw`,`lmap`,`lprob`) VALUES

('','$IP','$name','$date','$dific','$lquest','$lansw','$lmap','$lprob')
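Because the `name` value comes directly from the form, an INSERT of this kind is commonly written with bound parameters instead of interpolated variables. The following is a minimal sketch using PHP's PDO extension; it is not the code used in the paper, and the function name `insertExam` and the layout of the `$row` array are assumptions. The `id` column is omitted so that the auto-increment mechanism assigns it.

```php
<?php
/**
 * Inserts one `Exam` record with bound parameters and returns its new id.
 * ASSUMPTION: $row holds the keys IP, name, date, dific, lquest, lansw, lmap, lprob.
 */
function insertExam(PDO $pdo, array $row): int
{
    $sql = 'INSERT INTO `Exam`
            (`IP`,`name`,`date`,`dific`,`lquest`,`lansw`,`lmap`,`lprob`)
            VALUES (:IP, :name, :date, :dific, :lquest, :lansw, :lmap, :lprob)';
    $stmt = $pdo->prepare($sql);
    // Values are sent separately from the SQL text, so quotes in a name
    // cannot change the meaning of the query.
    $stmt->execute([
        ':IP'     => $row['IP'],
        ':name'   => $row['name'],
        ':date'   => $row['date'],
        ':dific'  => $row['dific'],
        ':lquest' => $row['lquest'],
        ':lansw'  => $row['lansw'],
        ':lmap'   => $row['lmap'],
        ':lprob'  => $row['lprob'],
    ]);
    return (int) $pdo->lastInsertId();
}
```

The returned id is the value that the later programs would need in order to find the record again, for example when passed on as a hidden form field.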

The “online1.php” program displays the selected answers for every answered question from the test:

for ($i = 0; $i < 60; $i++) {

if (array_key_exists("q".$i, $_POST)) {

echo(" … $i … ".$_POST["q".$i]." … ");

//question $i was answered with $_POST["q".$i]

} else echo(" … $i … "); //question $i was not answered

}

The “online2.php” program updates the user record at the end of the test through the following steps:

· Select all user and test data from the database using the record id:

SELECT * FROM `Exam` WHERE `id`='$_POST['id']' LIMIT 1

· Reconstruct the question and answer keys from the lists of keys in the `lquest` and `lansw` fields;

· Construct the list of user answers from the POST data, using an algorithm similar to the one described for the “online1.php” program, and send it to the database server using the SQL query:

UPDATE `Exam` SET `emap`='$emap' WHERE `id`='$_POST['id']'

· Accumulate the number of correctly answered questions and the number of earned points by querying the `Questions` table for points with a SELECT statement, and send the totals to the database server with UPDATE statements:

SELECT `puncte` FROM `Questions` WHERE `id`='$quest[$i]' LIMIT 1

UPDATE `Exam` SET `nquest`='$quest' WHERE `id`='$_POST['id']'

UPDATE `Exam` SET `scor`='$scor' WHERE `id`='$_POST['id']'

· Finally, set the end-of-test date and time, where $dat0 holds the value of date("y.m.d H:i:s", time()) computed in PHP:

UPDATE `Exam` SET `dat0`='$dat0' WHERE `id`='$_POST['id']'
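The scoring step can be sketched as a pure function that receives data already read from the database. The scoring rule used here is an assumption, since the paper does not state it: a question counts as correct when the single submitted answer is among its correct answers. The function name `scoreTest`, the array layouts, and the comma separator are likewise hypothetical.

```php
<?php
/**
 * Computes the values for `nquest`, `scor`, `lmap` and `emap`.
 * $points  : question key => points (`puncte`)
 * $correct : question key => list of correct answer keys (integers)
 * $given   : question key => submitted answer key (unanswered questions absent)
 * ASSUMPTION: one submitted answer per question, correct if listed in $correct.
 */
function scoreTest(array $points, array $correct, array $given, string $sep = ','): array
{
    $nquest = 0;
    $scor = 0;
    $lmap = [];
    $emap = [];
    foreach ($given as $questionId => $answerId) {
        if (!isset($points[$questionId])) {
            continue; // ignore keys that do not belong to this test
        }
        $answerId = (int) $answerId; // POST values arrive as strings
        $lmap[] = (int) $questionId;
        $emap[] = $answerId;
        if (in_array($answerId, $correct[$questionId] ?? [], true)) {
            $nquest++;
            $scor += $points[$questionId];
        }
    }
    return [
        'nquest' => $nquest,
        'scor'   => $scor,
        'lmap'   => implode($sep, $lmap),
        'emap'   => implode($sep, $emap),
    ];
}
```

With the four values computed in one place, the record could also be updated with a single UPDATE statement instead of one statement per field.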

Daily Report

At the end of an examination day (or at any time during it), a program that displays the evaluation results is useful. We therefore also developed a program called marks.php, which queries the `Exam` table for the `name`, `dific`, `scor`, `nquest`, `date`, and `dat0` field values and displays all records of the current day, according to the following query, where $today holds the value of date("y.m.d") computed in PHP:

SELECT `name`, `dific`, `scor`, `nquest`, `date`, `dat0` FROM `Exam`

WHERE `date` LIKE '$today%' ORDER BY `name`, `date`

Results

The multiple-choice evaluation tests were applied to two groups of first-semester undergraduate students in Materials Engineering (3812 and 3813), who took the same General Chemistry course.

From each group of students a subgroup was extracted according to the exam pass criterion (3812-passed and 3813-passed). The numbers of correctly answered questions and the points percentages obtained by all tested students, by group, are given in table 1. The underlined values in table 1 represent the subgroup that passed the examination (which also includes an oral examination). The statistical results obtained after evaluation for the entire groups and for the subgroups are presented in table 2.

Table 1. Experiment results

Numbers of correctly answered questions and points percentages by groups of students

| Group | Numbers of correctly answered questions (NCAQ) |
|---|---|
| 3812 | 28, 26, 27, 21, 33, 40, 28, 30, 20, 9, 9, 29, 28, 29, 30, 24, 19 |
| 3813 | 9, 17, 22, 40, 26, 10, 9, 23, 21, 19, 16, 43, 13, 30, 21, 14, 33, 17, 8, 31, 17, 30, 14, 15, 17, 22 |

| Group | Points percentages (PP) |
|---|---|
| 3812 | 42, 42, 49, 36, 47, 65, 47, 44, 35, 14, 14, 51, 43, 46, 44, 32, 35 |
| 3813 | 14, 25, 30, 68, 41, 14, 13, 36, 37, 25, 24, 70, 19, 48, 36, 26, 52, 26, 11, 49, 31, 49, 20, 18, 29, 32 |

Table 2. Statistical results of evaluation on groups and subgroups

| No | Group | Students | NCAQ Mean | NCAQ SE | PP Mean | PP SE |
|---|---|---|---|---|---|---|
| 1 | 3812 | 17 | 25.3 | 1.91 | 40.4% | 3.03% |
| 2 | 3813 | 26 | 20.7 | 1.78 | 32.4% | 3.06% |
| 3 | 3812-passed | 9 | 29.67 | 1.67 | 46.56% | 2.68% |
| 4 | 3813-passed | 4 | 31.75 | 5.66 | 50% | 10.98% |

The scores obtained for groups and subgroups allow us to make some statistical interpretations. A good guide for this task is [2], whose recommendations we follow.

Denoting by A the first sample and by B the second sample from the entire population, the formulas used are:

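$$\mathrm{SE}(A) = \sqrt{\frac{\mathrm{Var}(A)}{N(A)-1}}, \quad \mathrm{SE}(B) = \sqrt{\frac{\mathrm{Var}(B)}{N(B)-1}}, \quad \mathrm{SE}(A,B) = \sqrt{\mathrm{SE}(A)^2 + \mathrm{SE}(B)^2},$$

$$\mathrm{df}(A,B) = \frac{\mathrm{SE}(A,B)^4}{\dfrac{\mathrm{SE}(A)^4}{N(A)-1} + \dfrac{\mathrm{SE}(B)^4}{N(B)-1}}, \quad F(A,B) = \frac{\mathrm{SE}(A)^2}{\mathrm{SE}(B)^2},$$

$$(1-\alpha)\,\mathrm{CI}(A,B) = \bigl(M(A) - M(B)\bigr) \pm t(\alpha/2, \mathrm{df}(A,B)) \cdot \mathrm{SE}(A,B)$$

where:

· N(A) and N(B) are the sample sizes;

· M(A) and M(B) are the means of the sample values;

· Var(A) and Var(B) are the biased variances of the sample values;

· SE(A) and SE(B) are the standard errors of the sample means;

· SE(A,B) is the unpooled standard error of the difference of means;

· df(A,B) is the number of degrees of freedom for the pair of samples (A, B);

· F(A,B) is the statistic of the two-tailed F test;

· t(α/2, df(A,B)) is the inverse of the Student's t distribution;

· (1-α)CI(A,B) is the confidence interval for the difference between means.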

Note that if F(1-α/2, N(A)-1, N(B)-1) < F(A,B) < F(α/2, N(A)-1, N(B)-1), then at the α significance level there is no significant difference between the variances of the samples, where F(p,∙,∙) is the inverse of the right-tailed F probability distribution. Moreover, if (1-α)CI(A,B) includes the value 0, then at the α significance level there is no significant difference between the means.
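As a worked check of the quantities above, the following minimal sketch computes the standard error of a sample from its biased variance and, from two means and standard errors, the values SE(A,B), df(A,B), F(A,B), and the confidence interval. The function names are hypothetical. PHP's core library has no inverse Student's t function, so the critical value t is passed in as a parameter, here taken from table 3.

```php
<?php
/**
 * Standard error of the mean, from the biased variance: sqrt(Var / (N - 1)).
 */
function standardError(array $values): float
{
    $n = count($values);
    $mean = array_sum($values) / $n;
    $ss = 0.0;
    foreach ($values as $v) {
        $ss += ($v - $mean) ** 2;
    }
    $biasedVar = $ss / $n;
    return sqrt($biasedVar / ($n - 1));
}

/**
 * Unpooled comparison of two samples A and B.
 * $t is the critical value of the t distribution, supplied by the caller.
 */
function compareSamples(
    float $mA, float $seA, int $nA,
    float $mB, float $seB, int $nB,
    float $t
): array {
    $seAB = sqrt($seA ** 2 + $seB ** 2);
    $df = $seAB ** 4 / ($seA ** 4 / ($nA - 1) + $seB ** 4 / ($nB - 1));
    $diff = $mA - $mB;
    return [
        'se'   => $seAB,
        'df'   => $df,
        'diff' => $diff,
        'ci'   => [$diff - $t * $seAB, $diff + $t * $seAB],
        'F'    => $seA ** 2 / $seB ** 2,
    ];
}

// NCAQ values of group 3812 (table 1) and the table 2 values of groups 1 and 2.
$ncaq3812 = [28, 26, 27, 21, 33, 40, 28, 30, 20, 9, 9, 29, 28, 29, 30, 24, 19];
$se1 = standardError($ncaq3812);
$r12 = compareSamples(25.3, 1.91, 17, 20.7, 1.78, 26, 2.34);
```

Rounded to two decimals, `$se1` agrees with the NCAQ SE of group 1 in table 2, and `$r12` agrees with the SE(A,B), df(A,B), and F(A,B) entries for the pair (1,2) in tables 3 and 4; the interval in `$r12['ci']` contains 0.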

Applying the above formulas for the t and F tests to the data from tables 1 and 2 gives the statistics in tables 3 and 4.

Table 3. Differences between means at the α = 5% significance level

| (A,B) | SE(A,B) NCAQ/PP | df(A,B) NCAQ/PP | M(A)-M(B) NCAQ/PP | t(0.025,df(A,B)) NCAQ/PP | 95%CI(A,B) NCAQ/PP | Difference NCAQ/PP |
|---|---|---|---|---|---|---|
| (1,2) | 2.61/4.31 | 37.67/39.19 | 4.60/8.00 | 2.34/2.33 | [-1.50, 10.70] / [-2.04, 18.04] | No/No |
| (1,3) | 2.54/4.05 | 22.97/22.85 | -4.37/-6.16 | 2.41/2.41 | [-10.47, 1.73] / [-15.89, 3.57] | No/No |
| (1,4) | 5.97/11.39 | 3.71/3.47 | -6.45/-9.60 | 4.18/4.18 | [-31.40, 18.50] / [-57.17, 37.97] | No/No |
| (2,3) | 2.44/4.07 | 25.83/27.50 | -8.97/-14.16 | 2.38/2.37 | [-14.79, -3.15] / [-23.81, -4.51] | Yes/Yes |
| (2,4) | 5.93/11.40 | 3.62/3.48 | -11.05/-17.60 | 4.18/4.18 | [-35.83, 13.73] / [-65.21, 30.01] | No/No |
| (3,4) | 5.90/11.30 | 3.54/3.36 | -2.08/-3.44 | 4.18/4.18 | [-26.73, 22.57] / [-50.64, 43.76] | No/No |

Table 4. Differences between variances at the α = 5% significance level

| (A,B) | F(A,B) NCAQ/PP | F(0.975, N(A)-1,N(B)-1) | F(0.025, N(A)-1,N(B)-1) | Difference NCAQ/PP |
|---|---|---|---|---|
| (1,2) | 1.151/0.980 | 0.383 | 2.384 | No/No |
| (1,3) | 1.308/1.278 | 0.320 | 4.076 | No/No |
| (1,4) | 0.114/0.076 | 0.245 | 14.232 | Yes/Yes |
| (2,3) | 1.136/1.304 | 0.363 | 3.937 | No/No |
| (2,4) | 0.099/0.078 | 0.271 | 14.115 | Yes/Yes |
| (3,4) | 0.087/0.060 | 0.185 | 14.540 | Yes/Yes |

Discussions

The use of multiple-choice tests makes it possible to cover a large number of subjects from the entire course. In our case, the test always covers all chapters and 74% of the total number of subjects (60 of 81).

Extracting a reduced number of questions (60 of the 270 questions in our database) and a reduced number of answers (240 of the 1376 answers in our database), together with randomizing the order of the questions and the order of the answers belonging to each question, makes learning the answers almost impossible. Even with all questions and answers published [3], the results obtained by the students support this assumption.

The results open an interesting discussion, supported at the 5% significance level (tables 3 and 4).

Comparing the entire groups (1 and 2), there is a difference between the means, but it is not statistically significant at the 5% level.

Looking at group 1 and its subgroup 3, there is again a difference between the means, but it is not statistically significant at the 5% level.

A clear difference between means, this time statistically significant, appears between the entire group 2 and subgroup 3 of group 1 (students who passed the exam).

The variances of groups 1 and 2 and of subgroup 3 are approximately the same and are far from being significantly different.

Although the difference between the means of group 2 and subgroup 3 is statistically significant, it is not relevant in practice, because the two samples derive from different selections.

Table 4 shows that subgroup 4 has a variance that differs statistically significantly from that of all the others. This means that there are large differences in the level of general chemistry knowledge among the students from group 2 who passed the exam. The values in table 3 concerning subgroup 4 show that there is no significant difference between its mean and those of all the others.

Conclusions

The use of a MySQL database server combined with an easy-to-use programming language (such as PHP) and output interface (such as HTML) makes the creation of a multiple-choice examination system an easy task. Moreover, any update is easy to make.

A statistical interpretation of multiple-choice test results can reveal interesting aspects of the level of knowledge of different groups of students, which can point to educational issues to be addressed in practical work and seminars.

References